What is AIXI? — An Introduction to General Reinforcement Learning

Jan Leike, December 2015

This is a short introduction to a mathematical theory of artificial general intelligence that is meant to be accessible without much prior knowledge in mathematics or machine learning.

Reinforcement Learning

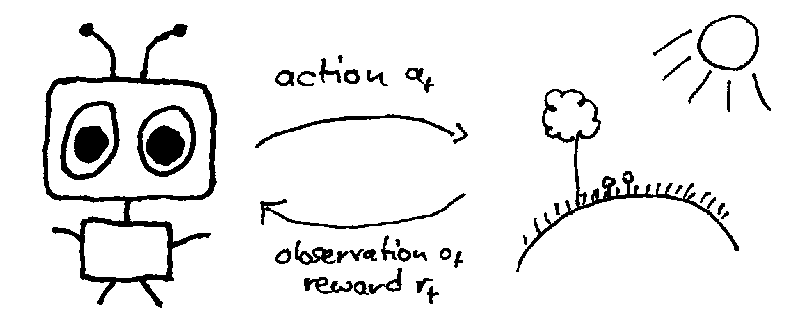

In reinforcement learning an agent (e.g. a robot) interacts with an environment (the space the agent lives in). For the time steps \(t = 1, 2, \ldots\), the agent takes an action \(a_t\) (moving its actuators/limbs) and receives a percept \(e_t = (o_t, r_t)\) consisting of an observation \(o_t\) (sensory input like a camera image) and a numeric reward \(r_t \in \mathbb{R}\). You can think of rewards as treats for the agent; the larger the number, the better the treat. We usually assume that rewards are bounded between 0 and 1 (mostly for technical reasons).

The goal is to maximize total rewards \(\sum_{t=1}^m r_t\) until some pre-specified time limit \(m\) is reached. It is important to emphasize that rewards are all that our agent cares about.

Sometimes we also want to consider all future rewards over all time steps; to avoid having to deal with infinities we choose a discount function \(\gamma: \mathbb{N} \to \mathbb{R}_{\geq 0}\) with \(\sum_{t=1}^\infty \gamma(t) < \infty\) that tells us how much we value rewards closer to the present relative to rewards further in the future. In this case we aim to maximize discounted rewards \(\sum_{t=1}^\infty \gamma(t) r_t\).

The agent interacts with the environment by taking an action \(a_1\), receiving a percept \(e_1\), then taking another action \(a_2\), and so on. This generates a sequence \(a_1 e_1 a_2 \ldots\) that we call history. At time step \(t\) the agent has taken \(t - 1\) actions and received \(t - 1\) percepts, so the history is

\[a_1 e_1 a_2 e_2 \ldots a_{t-1} e_{t-1}.\]We use the shorthand notation \(æ_{<t}\) to denote the history at time step \(t\). Generally, agent and environment may be stochastic, so this history is generated randomly; i.e., it is a sequence of random variables.

We describe what the agent does with a function \(\pi\) called policy that maps a history to an action that the agent takes after seeing this history. We describe what the environment does with a function \(\nu\) that maps a history and an action to a percept. Generally these mappings can be stochastic. Formally, denoting the set of actions with \(\mathcal{A}\) and the set of percepts with \(\mathcal{E}\), these functions are written as follows.

\[\pi: (\mathcal{A} \times \mathcal{E})^* \rightsquigarrow \mathcal{A}\] \[\nu: (\mathcal{A} \times \mathcal{E})^* \times \mathcal{A} \rightsquigarrow \mathcal{E}\](\(\,^*\) denotes the Kleene star and \(\rightsquigarrow\) a stochastic mapping.) Together these two functions define the whole interaction of agent and environment.

In classical reinforcement learning we make additional assumptions on the environment. One common assumption is the Markov assumption together with full observability, which means that the last percept the agent received contains all relevant information about the state of the environment. Another typical assumption is ergodicity, which guarantees that the agent can easily recover from any mistakes it made in the past.

These assumptions are true when training an agent to play ATARI video games and other problems that reinforcement learning is currently applied to. However, they are violated for a general AI acting in the real world: this environment is partially observable since not all relevant information is available to the sensors at all times and not ergodic because some mistakes are just impossible to recover from (such as driving off a cliff). What makes general reinforcement learning general is that we are not making these assumptions.

Known Environments

We start by supposing that we have full knowledge of the environment. In other words, we are given an environment function \(\mu\) (we always denote the true environment with \(\mu\) instead of \(\nu\)) and we are now trying to find the best policy \(\pi^*_\mu\) for this environment.

But what is the best policy for our agent? Remember that the only thing our agent cares about are rewards. In particular, the total sum of (discounted) rewards. So in order to evaluate the ‘value’ of a policy \(\pi\) to our agent, we use the following value function.

\[V^\pi_\mu(æ_{<t}) := \mathbb{E}^\pi_\mu \left[ \sum_{k=t}^\infty \gamma(k) r_k \,\middle|\, æ_{<t} \right]\]In other words, the value of policy \(\pi\) in environment \(\mu\) given the history \(æ_{<t}\) is the expected future discounted rewards when following policy \(\pi\) in environment \(\mu\) conditional on the history \(æ_{<t}\).

The value function plays a central role in reinforcement learning because it quantifies how desirable histories are. This lets us compare different histories and steer towards the histories that have higher value.

Using the value function, we can define the \(\mu\)-optimal policy \(\pi^*_\mu\) as any policy that achieves the maximal value in the environment \(\mu\):

\[\pi^*_\mu \in \arg\max_\pi V^\pi_\mu\]This is what our agent wants to do when acting in environment \(\mu\); it is optimal by definition. However, this does not tell us how to compute this policy. The matter of computation shall not concern us until the last section.

Unknown Environments

Usually we don’t know the true environment, and the challenging part of reinforcement learning is that you have to learn the environment while trying to collect rewards. This involves a tradeoff between exploration and exploitation. Exploration means trying new things and going into unknown parts of the environment and it has the potential to unlock better reward sources. But it incurs an opportunity cost because the agent could spent its time exploiting by collecting rewards in the known parts of the environment. If you explore too much you spend too little time exploiting and end up will less rewards, but if you explore too little you might not learn crucial parts of the environment and also end up with less rewards. It is not known what the optimal balance between exploration and exploitation is.

Here we take the Bayesian approach. Our environment is unknown, but we assume that it belongs to a known class of (countably) infinitely many environments

\[\mathcal{M} := \{ \nu_1, \nu_2, \ldots \}.\]We pick a prior probability distribution \(w\) over the class \(\mathcal{M}\) such that every environment is assigned a positive probability. The prior \(w(\nu)\) on the environment \(\nu\) quantifies how likely the agent thinks it is to be in environment \(\nu\) before the interaction starts.

For the general reinforcement learning agent AIXI (pronounce: Ike-see) the environment class \(\mathcal{M}_{comp}\) is the class of all computable environments (environments whose mapping \((\mathcal{A} \times \mathcal{E})^* \times \mathcal{A} \rightsquigarrow \mathcal{E}\) can be computed by a Turing machine). AIXI uses a universal prior \(w(\nu) := 2^{-K(\nu)}\) where \(K(\nu)\) is the length of the shortest description (Kolmogorov complexity) of the environment \(\nu\). This prior favors environments that have a simple description over environments that don’t have a simple description, in line with Occam’s razor.

The assumption that the true environment is in the class \(\mathcal{M}\) is crucial, but it is not a very strong assumption if the class \(\mathcal{M}\) is large. In particular, it is consistent with the current theories of physics that the class \(\mathcal{M}_{comp}\) of all computable (stochastic) environments is large enough to contain our own universe.

Having fixed the environment class \(\mathcal{M}\) and a prior \(w\), we get a Bayesian mixture \(\xi\) of all environments weighted by the prior:

\[\xi := \sum_{\nu \in \mathcal{M}} w(\nu) \nu\]AIXI’s policy (or: AI\(\xi\)’s policy) is the policy \(\pi^*_\xi\) that is optimal in the mixture \(\xi\). It is the Bayes-optimal agent according to the prior \(w\) maximizing \(\xi\)-expected rewards.

Optimality

Is AIXI the best agent? If you are a hardcore Bayesian, then you can skip this section; you’re already convinced that AIXI is the best agent. After all, it’s Bayes-optimal. For everyone else there’s bad news: we currently don’t know of any other nontrivial way that AIXI is optimal. Moreover, Bayes-optimality is highly subjective because two different Bayesians with two different universal priors could view each other’s AIXI as a very stupid agent. The reason is that there are bad priors like the dogmatic prior: this prior assigns high probability that the agent goes to hell (reward 0 forever) if it does not follow one particular dogma \(\pi'\). As long as the policy \(\pi'\) yields some rewards, the prior says that exploration would be too costly and AIXI does not dare to explore, thus never learns the truth about this belief.

The underlying problem is that a prior always introduces bias. In the passive setting such as sequence prediction where the agents takes no actions, the prior’s bias washes out as more data comes in and asymptotically vanishes. This is not true for the reinforcement learning setting.

So is this the end of general reinforcement learning? Luckily no. An objective notion of optimality is asymptotic optimality which states that asymptotically the agent acts according to the optimal policy in the sense that the value of the agent’s policy \(\pi\) converges to the optimal value in the true environment \(\mu\):

\[V^\pi_\mu(æ_{<t}) \to V^{\pi^*_\mu}_\mu(æ_{<t}) \text{ as } t \to \infty.\]AIXI is not asymptotically optimal because it does not explore enough. However, there are knowledge-seeking agents, special policies who explore optimally. If we add a knowledge-seeking component to AIXI to make it seek knowledge some of the time, the resulting agent asymptotically learns the true environment and increasingly acts Bayes-optimal, achieving asymptotic optimality.

But is asymptotic optimality a good notion of optimality? It has some obvious downsides. For example, it does not discourage the agent from getting caught in traps. Once you are in a trap, all actions are equally bad and hence your policy is optimal. Even worse: to be asymptotically optimal you have to explore everything and spring every trap because it might contain a hidden stash of rewards. Furthermore, asymptotic optimality is a poor measure for what we actually care about: rewards. We want rewards as soon as possible, but an asymptotically optimal agent learns to act optimally in the limit, after an arbitrarily long time has passed.

In summary, if you explore the Bayes-optimal amount you’re not objectively optimal, and if you explore more, you’re likely to end up in a trap. But maybe we shouldn’t be too harsh on our agents: in these general environments it’s just too hard to do well all the time.

Why General Reinforcement Learning?

We now return to a problem that we disregarded so far: computation. AIXI is incomputable and hence we cannot build it in the real world. There are practical approximations to AIXI that learn to play simple video games, but these approximations fall short of the full power of the model. So is AIXI a failed theory? That depends on what you expect.

We should not mistake AIXI for a prescriptive theory that tells us how superintelligent AI should be build. Instead, general reinforcement learning should be thought of as a descriptive theory that we can use to study superintelligent AI without building one. This is particularly useful as a tool to formally investigate AI safety, questions related to how to make superintelligent AI not kill everyone.

Notes and References

AIXI was developed by Marcus Hutter starting in the year 2000. The standard reference is his book which is highly technical and a bit outdated.

See also:

- Marcus Hutter’s own introduction to AIXI

- A formal introduction to AIXI and recent results

- An intuitive explanation of Solomonoff induction by Alex Altair (Solomonoff induction is a passive variant of AIXI using a universal prior for sequence prediction)

Acknowledgements

Thanks to Marcus Hutter and Freya Fleckenstein for feedback.